Uncertainty can be confounding when thinking rationally. After many reasoned steps forward you may arrive at a fork in the road where your imperfect knowledge of the facts prevents you from knowing the correct direction of your next step. And if that step is wrong, all subsequent steps will be wrong too, even if each is executed with impeccable logic.

Twice in the 20th century fundamental discoveries of uncertainty shocked science. First in quantum physics when it was realised that you can never be certain of all properties of a particle. For example, you cannot know both where a particle is and where it is going. And from perplexingly erratic computer models of the atmosphere came chaos theory, which said that tiny and unknowable errors in the current state of a system can lead to a myriad of very different future outcomes — the so-called butterfly effect.

Although some physicists, including Einstein, were tempted to reject quantum uncertainty, they did accept it in time, and it blossomed into new avenues of research in particle physics, mathematics and beyond.

Chaos theory also found applications in many disciplines, and even gave rise to fractal works of art such as the famous Mandelbrot set.

The Mandelbrot set. Screenshot from xaos.
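For readers who like to see the butterfly effect for themselves, here is a minimal Python sketch. It uses the logistic map, a standard textbook toy for chaos and not one of the atmospheric models mentioned above; the function name, the starting value 0.2 and the nudge of one part in ten billion are all arbitrary choices made for illustration.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x) and return every state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting states that differ by a tiny, "unknowable" error.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# How far apart the two futures are at each step.
gaps = [abs(p - q) for p, q in zip(a, b)]

# First step (if any) at which the two futures clearly disagree.
first_large = next((i for i, g in enumerate(gaps) if g > 0.1), None)

print("initial gap:", gaps[0])
print("largest gap:", max(gaps))
print("first step with a gap above 0.1:", first_large)
```

The two runs start out indistinguishable for all practical purposes, yet within a few dozen steps they bear no resemblance to each other, even though every step follows the same simple rule exactly.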

Uncertainty is a fundamental fact of life that should not only be accepted but embraced. I first met it in science, but it completely wrapped its arms and tentacles around me when I took an interest in the maelstrom of politics, society and economics.

But let me start with the science.

Sunspots

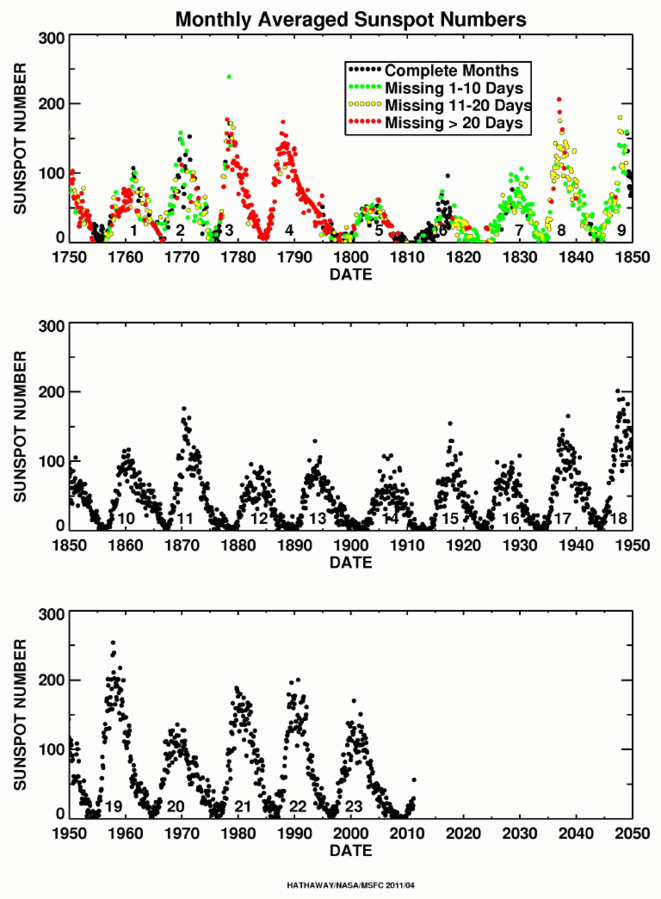

In 1848 Rudolf Wolf introduced a system for recording the number of sunspots, which was adopted by observatories across the world. I believe it to be the oldest international collaboration in science. Data from before 1848 is far more uncertain but is still an important part of the record.

My PhD was on analysing and predicting solar activity using neural networks and other weird and wonderful methods. In return for generous funding, I had to report my progress to the European Space Agency (ESA) at regular intervals. As a young, rather startled researcher I could manage little more than an embarrassed "dunno" at first, but I soon learned how best to present examples of my predictions with a few sentences outlining why the uncertainties were so enormous. In some cases the methods could even predict a negative number of sunspots on the Sun, which is, of course, silly.

My academic elders were patient because they understood that an essential part of a PhD — an academic rite of passage even — is in dealing with the bewildering uncertainties involved in real-world research.

My goal was to reduce the size of those uncertainties, but to do that required estimating and understanding them. Was it because the number of spots on the Sun (and other related time series) was inherently unpredictable, or was it simply that our prediction techniques were rubbish? As you might expect, it was a bit of both.

(Incidentally, I did publish a prediction in 1995 that the next solar maximum in 2000 would be smaller than the two before it, which turned out to be right, but as it's only one data point I'm not over-egging this claim.)

Solar flares

A solar flare is an event that takes place in the Sun's atmosphere. It releases a huge amount of energy across all wavelengths of light and in the form of fast particles. A typical flare lasting a few minutes could, if we had means of harnessing it, supply our civilisation's energy needs for a year. Big events release more energy than we've used in all human history. For those who like numbers, a big flare releases around 10^25 joules.

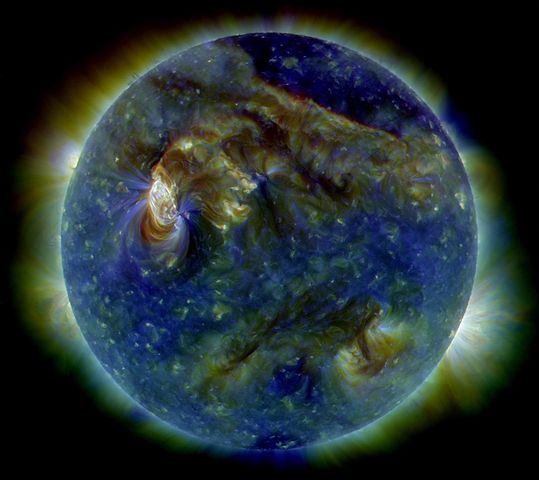

In addition to being mind-bogglingly powerful, solar flares can be quite pretty.

This is an ultraviolet image of our star, the Sun. The white patch at the 10 o'clock position in the image is a solar flare (I'm afraid hard X-ray images are much less artful). Note how the structures are reminiscent of fractals. From NASA/SDO/AIA - NASA Image of the Day, Public Domain.

Once I'd gained my PhD and started supervising PhD students of my own, I found myself working on making images of solar flares in hard X-rays (the shortest-wavelength, highest-energy form of X-rays) for NASA's RHESSI space mission. The snag with this is you can't do optics for such high-energy forms of light. My favourite analogy is that making an image from hard X-rays is like playing table tennis blindfolded, with an opponent who's firing bullets at you. Not only that, you have to figure out what he or she might look like from the bullets you managed to deflect with your reinforced bat.

Well, it wasn't as dangerous as that of course. Less dramatically, imaging in hard X-rays is akin to ultrasound imaging of a foetus in the womb, or the echolocation used by bats. And here we return to uncertainty. The images of solar flares that we were able to reconstruct were not unique. Given the raw data from observing a particular solar flare with the RHESSI satellite, our Earth-bound computers and algorithms were capable of reconstructing many very different images.
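To see how one set of measurements can be consistent with more than one picture, here is a deliberately tiny Python sketch. It is a toy and not how RHESSI data was actually processed: the three-pixel "images", the two measurement patterns and the function name are all invented for illustration. The only point is that when you have fewer measurements than unknowns, different images can produce identical data.

```python
def measure(image, patterns):
    """Return one number per pattern: the weighted sum of the image pixels."""
    return [sum(w * p for w, p in zip(weights, image)) for weights in patterns]


# Hypothetical instrument: only 2 measurements of a 3-pixel "image".
patterns = [
    [1, 1, 0],  # sensitive to the left and middle pixels
    [0, 1, 1],  # sensitive to the middle and right pixels
]

image_a = [1, 0, 1]  # two bright pixels at the edges
image_b = [0, 1, 0]  # one bright pixel in the middle

data_a = measure(image_a, patterns)
data_b = measure(image_b, patterns)

print("data from image A:", data_a)
print("data from image B:", data_b)
print("same data, different images:", data_a == data_b and image_a != image_b)
```

Both images give exactly the same two numbers, so the data alone cannot tell you which one you were looking at. Extra knowledge or assumptions are needed to choose between them.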

So what to do? Shrug and give up? No, of course not. The data was telling us something, but careful work was needed. When you peer at an ultrasound image and have trouble seeing the baby, that's your brain struggling to make sense of uncertain information even with the aid of significant computer power.

For solar flares we concentrated on extracting what reliable information we could from this highly uncertain data and then used it to sleuth our way towards a better set of models of what was going on in the solar atmosphere.

The big problem in solar physics was that to produce X-rays in the quantities seen, the models required impossibly large amounts of energy in the Sun's atmosphere. In other words, there was a big energy budget deficit.

Couldn't we just blame our very uncertain data? No, because we knew what the uncertainties were from well-proven statistical theory and we could do sense checks against information from other observations of the Sun. The bottom line was that we were forced to accept the data was good to within measurable uncertainties and improve the models.

This is fine. Every good scientist should seek to falsify models with data. Especially their own. That's how you improve models and progress science. Of course, scientists who developed them may well be unhappy if new data demolishes their life's work, but many will take it on the chin and see the bigger picture. Even if they don't, their younger peers will in time. Science proceeds one funeral at a time, as physicist Max Planck once said (well, almost said in more long-winded German prose).

Society

I left my science career behind many years ago. I won't go into the details on why here, except to say that leaving a secure and successful career was not a trivial decision, and that despite great uncertainty about my own future, I felt compelled to venture out and explore a wider world with the skills I'd picked up.

These days I'm studying all kinds of public data on tax, spending and the economy. I was motivated initially by the 2008 financial crisis but more recently by upheavals in British politics. The uncertainties here, such as on tax receipts or GDP, are usually no more than a few percent. In astronomy, it was not uncommon in a new line of research to be faced with "order of magnitude" uncertainties, and to an astronomer that means a factor of ten. You read that correctly. We had to work with numbers that might be a factor of ten too small or too large or, if you prefer percentages, anywhere from 90% too low to 900% too high.
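The percentages behind a factor-of-ten uncertainty can be worked out explicitly. The following is a minimal Python sketch (the function name is made up for illustration) that converts "within a factor of f" into percentage errors relative to the true value. Read strictly, the range is lopsided: an estimate ten times too small is 90% low, while one ten times too large is 900% high.

```python
def factor_to_percent_range(factor):
    """Percentage errors (low, high), relative to the true value, for an
    estimate that may be too small or too large by the given factor."""
    low = (1.0 / factor - 1.0) * 100.0   # estimate = true value / factor
    high = (factor - 1.0) * 100.0        # estimate = true value * factor
    return low, high


# An astronomer's "order of magnitude" uncertainty.
astro_low, astro_high = factor_to_percent_range(10)

# An illustrative "few percent" uncertainty, expressed as a factor of 1.03.
econ_low, econ_high = factor_to_percent_range(1.03)

print(f"factor of 10:   {astro_low:+.0f}% to {astro_high:+.0f}%")
print(f"factor of 1.03: {econ_low:+.1f}% to {econ_high:+.1f}%")
```

For small uncertainties the low and high figures are nearly mirror images of each other, which is why quoting "plus or minus a few percent" works well for economic data but breaks down for order-of-magnitude estimates.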

So coming from my astronomical perspective, public data on public spending, tax and the economy are blissfully accurate. Well, the numbers are, but the uncertainty extends beyond accuracy of numbers.

Economic data would be meaningless without models of how it relates to the society around us and the history that's brought us to this point. And of course there are ideas to consider for the future, such as basic income, a job guarantee and new forms of credit and money that may reinvent that old bugbear of society, debt.

In other words, unlike astronomy and physical sciences, data on our society cannot be separated from ideas about what our society should be like and the spectrum of moral values of its people. If you are libertarian, you will view current public spending as too high; to a socialist, it will seem too low.

There's no economic model or paradigm of society and politics that fits the data. But as the data is uncertain, it's tempting for us humans — even highly intelligent and educated ones — to twist the data, disguise aspects we don't like with complexity, and even ignore particularly awkward bits, all to make a favourite model or ideology fit. But that would be fooling yourself, and if you are a persuasive communicator, you might fool others too, at least for a while until reality clobbers you.

But not only is our knowledge of reality uncertain, a society, just like the individuals that form it, is quite capable of behaving irrationally and might react to similar circumstances in surprisingly different ways at different times. Even a well-intentioned government that takes an evidence-based approach to policy cannot be sure that its actions will fulfil its aims.

Bertrand Russell probably best explained how we should deal with uncertainty:

"I think nobody should be certain of anything. If you're certain, you're certainly wrong because nothing deserves certainty. So one ought to hold all one's beliefs with a certain element of doubt, and one ought to be able to act vigorously in spite of the doubt... One has in practical life to act upon probabilities, and what I should look to philosophy to do is to encourage people to act with vigour without complete certainty."

I believe it should be the task of all citizens to try and think through the reality of their society and face up to uncertainty without letting it cloud what can be established with some certainty. If you don't attempt to do this then you're effectively placing blind trust in your peers, or worse still, in your government. Either way, you may well regret it when they begin acting with vigour in a way that you do not like.